IAM Heimdall

Secure, Verifiable IAM for AI Agents

Heimdall provides a secure, verifiable Identity and Access Management (IAM) framework specifically designed for AI agents, enabling them to operate with proper authentication, authorization, and accountability across digital services.

Table of Contents

- Introduction

- The Challenge: Identifying AI Agents

- Proposed Solution: A Dedicated Identity Layer

- How it Works: Core Components

- Key Concepts in Action

- Why IAM for AI Agents: Benefits

- Use Cases

Website: iamheimdall.com

Introduction - The Need for Agent Identity

Artificial Intelligence (AI) agents are transitioning from tools to autonomous actors, operating across the digital landscape on behalf of users. This evolution demands a robust way to manage their identity, permissions, and actions. Today’s methods—shared credentials, static API keys, or simple User-Agent strings—fall short, creating security risks and lacking the necessary control and accountability.

This Agent Identity Framework (AIF/Heimdall) addresses this gap by establishing a dedicated identity layer for AI agents. Built on proven standards like JWT and public-key cryptography, AIF provides a standardized, secure, and verifiable way for agents to identify themselves, prove their authorization, and interact responsibly with online services.

The Challenge - Identifying AI Agents

- Authentication: How does a service (website, API) know it’s interacting with a legitimate AI agent versus a human, a simple bot, or a malicious actor? How can it verify the agent is acting with valid user consent?

- Authorization: How can users and services grant agents specific, limited permissions instead of broad access via shared credentials or overly permissive API keys?

- Accountability: How can actions taken by autonomous agents be reliably attributed back to the specific agent instance, its provider, and the delegating user for security and compliance?

- Trust & Interoperability: How can services make informed decisions about interacting with diverse agents from various providers in a standardized way?

Current methods – relying on User-Agent strings (easily spoofed), IP addresses (unreliable), or sharing user credentials/static API keys – are inadequate, insecure, and non-standardized for the complex interactions autonomous agents will undertake.

Solution - A Dedicated Identity Layer

Heimdall is proposed as a standardized, secure, and verifiable identity layer specifically designed for AI agents, treating them as first-class digital entities. It aims to provide the foundational infrastructure for trust and accountability in the agent ecosystem.

Core Goals:

- Verifiable Identity: Provide a standard way to uniquely identify agent instances and their origins.

- Secure Delegation: Enable users to securely grant specific, revocable permissions to agents.

- Standardized Authorization: Allow services to reliably verify agent permissions.

- Transparent Accountability: Facilitate clear audit trails for agent actions.

How it Works - Core Components

Heimdall integrates proven web standards (URIs, JWT, PKI) with agent-specific concepts:

- (AID) Agent ID: A structured URI (aif://issuer/model/user-or-pseudo/instance) uniquely identifying each agent instance and its context (who issued it, what model, who delegated). Supports pseudonymity for user privacy.

- (ATK) Agent Token: A short-lived, cryptographically signed JSON Web Token (JWT). The ATK acts as the agent’s “digital passport,” containing:

  - The agent’s AID (sub claim).

  - The issuing entity (iss claim).

  - The intended audience/service (aud claim).

  - Explicit, granular permissions granted to the agent (actions + conditions).

  - The purpose of the delegation.

  - Verifiable trust_tags indicating issuer reputation, capabilities, user verification level, etc. This is optional.

- (REG) Registry Service: A verification infrastructure (initially centralized/OSS, potentially federated later) where:

  - Services retrieve Issuing Entity public keys to verify ATK signatures.

  - Token revocation status can be checked.

  - Issuer legitimacy can be confirmed.

- (TRUST) Trust Mechanisms: A phased approach starting with verifiable attributes (trust_tags in the ATK) allowing services to assess agent trustworthiness based on concrete data, evolving potentially towards dynamic scoring.

- (SIG) Agent Signature: Standard asymmetric cryptography (Ed25519/ECDSA) used by Issuing Entities to sign ATKs, ensuring authenticity and integrity.
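As a rough illustration of the AID format, the following sketch parses an `aif://issuer/model/user-or-pseudo/instance` URI into its four components. The names `AgentId` and `parse_aid`, the sample values, and the strict "exactly four non-empty segments" rule are assumptions made for this example, not part of a published Heimdall API.

```python
from dataclasses import dataclass

AID_PREFIX = "aif://"


@dataclass(frozen=True)
class AgentId:
    """The four AID components, in the order the URI lists them (hypothetical type)."""
    issuer: str
    model: str
    user_or_pseudo: str  # real user identifier or a pseudonym
    instance: str

    def to_uri(self) -> str:
        return f"{AID_PREFIX}{self.issuer}/{self.model}/{self.user_or_pseudo}/{self.instance}"


def parse_aid(uri: str) -> AgentId:
    """Split an AID into its components, rejecting anything malformed."""
    if not uri.startswith(AID_PREFIX):
        raise ValueError(f"not an aif:// URI: {uri!r}")
    segments = uri[len(AID_PREFIX):].split("/")
    # Assumption of this sketch: exactly four non-empty segments are required.
    if len(segments) != 4 or not all(segments):
        raise ValueError(f"expected issuer/model/user-or-pseudo/instance: {uri!r}")
    return AgentId(*segments)


# Illustrative values only.
aid = parse_aid("aif://issuer.example/model-x/pseudo-7f3a/inst-001")
print(aid.issuer, aid.user_or_pseudo, aid.instance)
```

Keeping the parsed AID as an immutable value makes it easy to use as a key in policy tables or audit records.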

Key Concepts in Action

Key Entities in the Ecosystem

- User: The human principal delegating authority to an AI agent.

- Issuing Entity (IE): An organization authorized to issue AIDs and sign ATKs for agents (e.g., AI providers, agent application builders).

- AI/LLM Provider: The entity providing the underlying AI model capabilities.

- Service Provider (SP): Digital services, websites, or APIs that agents interact with.

- Registry Operator: Entity maintaining instances of the Registry Service (REG).

- Agent Builder: Developer or organization creating the agent application/service.

Sample Workflow

-

Delegation: A User authorizes an Agent Platform (Issuing Entity) to act on their behalf for a specific purpose with defined permissions (e.g., via an enhanced OAuth/OIDC flow). This can be extended to multi layer delegation - agent to agent.

-

Issuance: The Issuing Entity generates an AID and issues a signed ATK containing the AID, permissions, purpose, and trust tags.

-

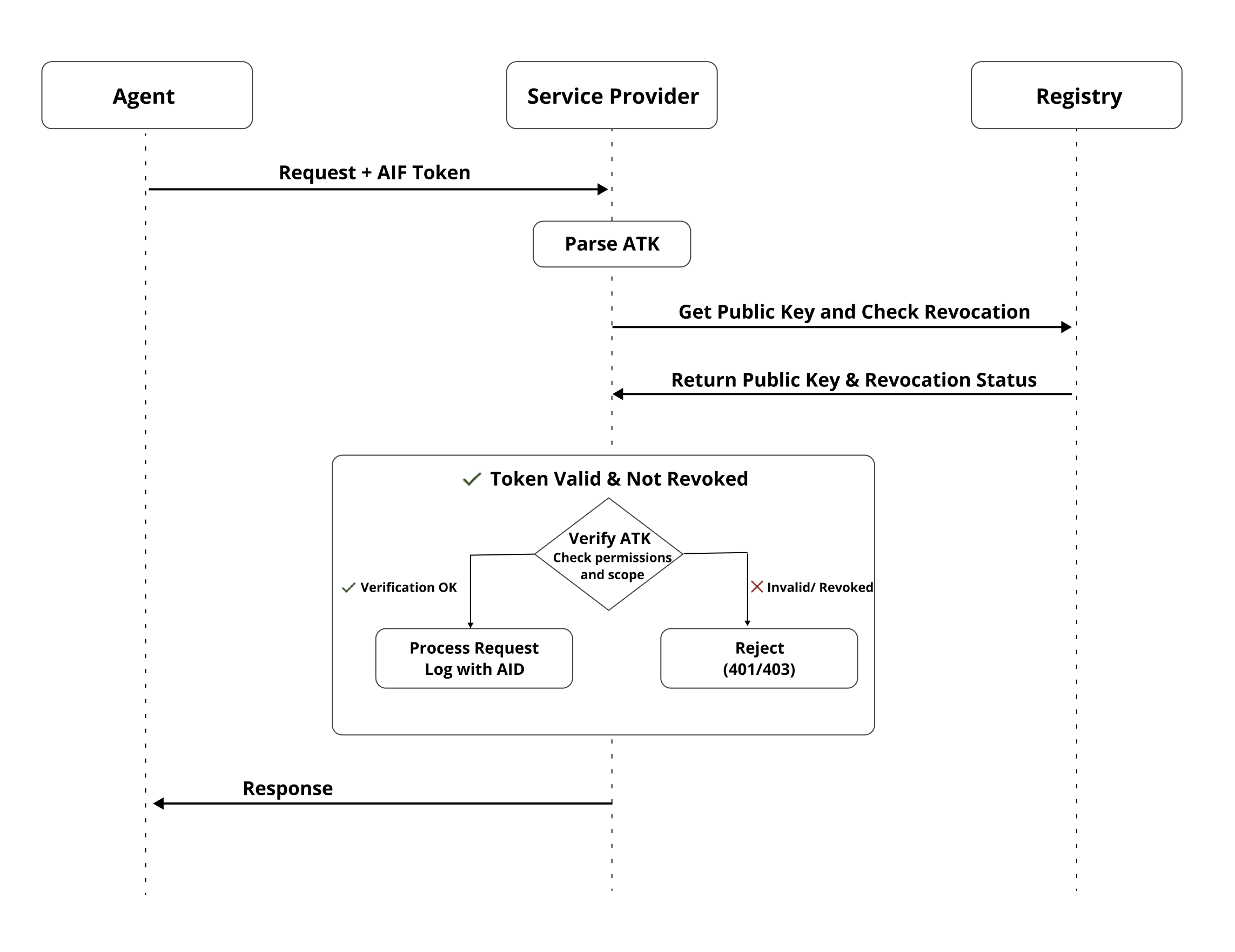

Interaction: The Agent presents its ATK (e.g., in an HTTP header) when interacting with a Service Provider.

-

Validation: The Service Provider:

- Retrieves the Issuing Entity’s public key from the REG service.

- Verifies the ATK’s signature and standard claims (expiry, audience).

- Checks the token’s revocation status via the REG.

- Evaluates the permissions claim against the requested action.

- Optionally uses trust_tags for risk assessment or policy decisions.

-

Accountability: The Service Provider logs the action with the verified AID and claims from the ATK.
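The validation step can be sketched roughly as follows. This is a minimal illustration, not a reference implementation: signature verification against the issuer's public key is assumed to have happened already and is passed in as `signature_valid`, the revocation lookup is modelled as a local set of token IDs, and the claim layout (`jti` as the token ID, `permissions` as a list of objects with an `action` key) is an assumption. The function name `validate_atk` and all sample values are hypothetical.

```python
import time
from typing import Optional, Set, Tuple


def validate_atk(claims: dict, signature_valid: bool, expected_audience: str,
                 requested_action: str, revoked_ids: Set[str],
                 now: Optional[float] = None) -> Tuple[bool, str]:
    """Apply the Service Provider checks in order and return (allowed, reason)."""
    now = time.time() if now is None else now
    if not signature_valid:  # result of verifying the JWT signature (done elsewhere)
        return False, "invalid signature"
    if claims.get("exp", 0) <= now:  # a JWT must not be accepted on or after exp
        return False, "token expired"
    aud = claims.get("aud")
    audiences = aud if isinstance(aud, list) else [aud]  # aud may be a string or a list
    if expected_audience not in audiences:
        return False, "wrong audience"
    if claims.get("jti") in revoked_ids:  # stands in for a REG revocation query
        return False, "token revoked"
    granted = {p.get("action") for p in claims.get("permissions", [])}
    if requested_action not in granted:
        return False, "action not permitted"
    return True, "ok"


# Illustrative claims; every value here is made up for the example.
claims = {
    "iss": "issuer.example",
    "sub": "aif://issuer.example/model-x/pseudo-7f3a/inst-001",
    "aud": "https://api.service.example",
    "exp": 1_700_000_300,
    "jti": "atk-123",
    "permissions": [{"action": "read_calendar"}],
    "purpose": "schedule a meeting",
}
print(validate_atk(claims, True, "https://api.service.example",
                   "read_calendar", set(), now=1_700_000_000))
```

Returning a reason alongside the decision gives the Service Provider something concrete to write into its audit log for rejected requests.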


Benefits

- For Service Providers:

  - Enhanced Security: Reliably distinguish legitimate, authorized agents from spoofed/unauthorized ones. Mitigate risks from credential sharing.

  - Granular Control: Apply specific policies, rate limits, or access rules based on verifiable agent identity and permissions.

  - Improved Auditability: Create trustworthy logs of agent actions for compliance and security analysis.

  - Reduced Abuse: Identify and block misbehaving agents or those from untrusted sources.

  - Analytics: Standardized way to gather analytics on agent traffic.

- For Agent Builders & AI Providers:

  - Build Trust: Signal legitimacy and security posture to users and services.

  - Enable Access: Provide a standard way for agents to access services requiring verification.

  - Differentiation: Stand out based on verifiable attributes and responsible practices.

- For Users:

  - Better Security: Reduced need to share primary credentials.

  - Greater Control: Clearer understanding and management of permissions delegated to agents (via agent platforms).

  - Increased Confidence: Assurance that agents act within defined boundaries.

Use Cases

Here are the key scenarios where Heimdall provides significant value:

Verifiable Agent Identification & Authentication

Scenario: A Service Provider (SP), like a financial API or a content platform, receives an incoming request. It needs to reliably determine the nature of the requestor. Is it the legitimate human user? Is it Agent Instance #123 delegated by that user? Is it Agent Instance #456 from a different platform acting for the same user? Or is it a malicious bot spoofing an agent's identity? Applying correct permissions, policies, and logging requires knowing who is truly acting.

Solution Principle: Agents require unique, verifiable digital identities, distinct from their delegating users. These identities must be cryptographically verifiable, allowing SPs to authenticate the specific agent instance making the request and differentiate it from other agents, users, or fraudulent actors.

Benefit: Enables service providers to reliably distinguish agent traffic, prevent identity spoofing, apply agent-specific policies (like rate limits or access to specialized endpoints), and build foundational trust necessary for more advanced interactions.

Alternatives & Gaps:

- User-Agent Strings: Trivially easy to fake; provide no cryptographic verification; offer limited, non-standardized information.

Gap: No verifiability, no unique identity.

- IP Addresses: Unreliable identifiers (dynamic IPs, NAT, VPNs, shared cloud infrastructure); identifies network location, not the specific agent instance or its delegation context.

Gap: No specific identity, unreliable.

- Shared User Credentials: Highly insecure; makes the agent indistinguishable from the user; grants excessive permissions; violates terms of service; prevents agent-specific control or audit.

Gap: Blurs identity, insecure, excessive privilege.

Licensing & Compliance Enforcement

Scenario: A software company licenses an API or dataset differently for direct human use versus automated use by AI agents. They need a reliable way to enforce these terms. Similarly, regulated industries may require proof that AI accessing sensitive data meets specific compliance standards (tied to the agent model or issuer).

Solution Principle: Access control policies check the verifiable agent identity. Policies can verify if the associated user/organization has the appropriate "agent access license". Claims within the ATK (aif_trust_tags) could also attest to the agent model's compliance certifications or the issuer's audited status.

Benefit: Enables future-ready, and potentially more sophisticated, licensing models based on usage type (human vs. agent). Allows enforcement of compliance requirements by verifying agent/issuer attributes against policy before granting access to regulated data or functions.

Alternatives & Gaps:

- Terms of Service / Honor System: Relies on users/developers behaving correctly. Ineffective against deliberate misuse.

Gap: No enforcement.

- Heuristic Usage Analysis: Trying to detect automated usage based on patterns. Can be unreliable, generate false positives/negatives.

Gap: Indirect, potentially inaccurate.
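One way such a licensing and compliance policy might look in code is sketched below. It assumes the token has already been validated, that `aif_trust_tags` is a flat mapping of tag name to value, and that the Service Provider keeps its own set of principals holding an agent access license. The function name `may_access_as_agent` and the tag names `issuer_audited` and `model_compliance` are hypothetical.

```python
AID_PREFIX = "aif://"


def may_access_as_agent(claims: dict, licensed_principals: set,
                        required_tags: dict) -> bool:
    """Allow agent access only for licensed principals whose token carries
    every trust tag the policy requires."""
    # AID layout: aif://issuer/model/user-or-pseudo/instance
    issuer, model, principal, instance = claims["sub"][len(AID_PREFIX):].split("/")
    if principal not in licensed_principals:
        return False  # no "agent access license" on record for this principal
    tags = claims.get("aif_trust_tags", {})
    return all(tags.get(name) == value for name, value in required_tags.items())


# Illustrative data only.
claims = {
    "sub": "aif://issuer.example/model-x/acme-corp/inst-001",
    "aif_trust_tags": {"issuer_audited": True, "model_compliance": "hipaa"},
}
policy = {"issuer_audited": True, "model_compliance": "hipaa"}
print(may_access_as_agent(claims, {"acme-corp"}, policy))
```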

Secure & Verifiable Delegation of Authority

Scenario: An agent requests access to a user's private medical records via a healthcare API. The API provider needs irrefutable proof that the specific human user explicitly consented to this particular agent accessing this specific data for this specific purpose.

Solution Principle: There must be a cryptographically verifiable link between an agent's action and the explicit act of delegation by an authenticated principal (user or organization). This link should ideally capture the scope and purpose of the delegation.

Benefit: Establishes a clear, non-repudiable chain of authority. Protects users by ensuring their consent is explicitly tied to agent actions. Protects SPs by providing proof of authorization before granting access to sensitive resources or actions.

Alternatives & Gaps:

- Agent Self-Attestation: The agent merely claims it's authorized. Completely untrustworthy.

Gap: No verification.

- API Key Implies Delegation: Assumes possession of a key equals authority for any action the key allows. Doesn't prove specific user consent for the agent's task.

Gap: No proof of specific user consent.

- Standard OAuth Authorization Code Flow: Verifies user consent for the client application (Agent Platform) to access certain scopes. It doesn't inherently create a verifiable link to a specific agent instance or the fine-grained permissions/purpose of the delegation without significant, non-standard extensions.

Gap: Focuses on client app authorization, not specific agent instance delegation proof.

Transparent & Attributable Auditing

Scenario: A configuration change is made via API, causing an outage. Investigation reveals the change originated from an IP address associated with an Agent Builder platform. Was it Agent X acting for User A, Agent Y for User B, a rogue employee, or a compromised credential?

Solution Principle: Interactions involving agents must generate secure, detailed audit logs containing verifiable, unique identifiers that reliably attribute each action to the specific agent instance, its issuing platform/provider, the delegating principal, and ideally the task purpose.

Benefit: Enables accurate security forensics, incident response, performance analysis, compliance reporting, and dispute resolution by providing a clear, trustworthy record of "who did what, acting for whom, and why".

Alternatives & Gaps:

- Standard Web/API Logs: Grossly insufficient for attributing actions to specific agents or delegations.

Gap: Lacks verifiable agent/delegation identity.

- OAuth Client ID Logging: Identifies the Agent Platform, but not the specific agent instance or user delegation behind the action.

Gap: Insufficient granularity.

- Proprietary Platform Logging: Each Agent Builder might have internal logs, but the Service Provider needs its own verifiable logs based on the credentials presented to it.

Gap: Not standardized, not available/verifiable by SP.
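A Service Provider's audit entry for an agent action could be assembled along these lines. This is only a sketch: the field names in the log entry, the `purpose` and `jti` claim names, and the function name `audit_record` are assumptions, and the claims are presumed to come from an ATK that has already been verified.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def audit_record(claims: dict, action: str, outcome: str,
                 when: Optional[datetime] = None) -> str:
    """Build one JSON log line answering: who did what, for whom, and why."""
    # AID layout: aif://issuer/model/user-or-pseudo/instance
    issuer, model, principal, instance = claims["sub"][len("aif://"):].split("/")
    when = when or datetime.now(timezone.utc)
    entry = {
        "timestamp": when.isoformat(),
        "aid": claims["sub"],
        "issuer": claims.get("iss"),
        "agent_instance": instance,
        "delegating_principal": principal,
        "purpose": claims.get("purpose"),
        "token_id": claims.get("jti"),
        "action": action,
        "outcome": outcome,
    }
    return json.dumps(entry, sort_keys=True)


# Illustrative values only.
claims = {
    "iss": "issuer.example",
    "sub": "aif://issuer.example/model-x/user-a/inst-001",
    "jti": "atk-123",
    "purpose": "rotate staging config",
}
line = audit_record(claims, "update_config", "allowed",
                    when=datetime(2024, 1, 1, tzinfo=timezone.utc))
print(line)
```

With records like this, the outage scenario above reduces to a lookup on `agent_instance` and `delegating_principal` rather than guesswork from an IP address.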

Standardized Trust & Reputation Signals

Scenario: An SP wants to implement risk-based access control. It might trust an agent delegated by a user who authenticated with strong MFA more than one delegated after a simple email verification. It might trust agents issued by established, certified providers more than unknown ones.

Solution Principle: A standardized mechanism is needed to securely convey verifiable attributes about the agent's context, such as the issuer's reputation tier, the user verification method used during delegation, or declared agent capabilities. This allows SPs to build trust dynamically.

Benefit: Enables sophisticated risk-based policies, incentivizes responsible practices by Issuing Entities, promotes a healthier ecosystem by allowing differentiation based on verifiable trust signals.

Alternatives & Gaps:

- IP Reputation/Geo-IP: Irrelevant for assessing delegation trust or agent capability.

Gap: Wrong signals.

- Manual SP Due Diligence/Allowlisting: Doesn't scale to a large number of Issuing Entities/Agent Builders. Subjective.

Gap: Not scalable, not standardized.

- Proprietary Risk Scores/Signals: Leads to fragmentation; lacks transparency and interoperability.

Gap: Not standardized, opaque.
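A risk-based policy driven by trust signals might look like the sketch below. The tag names `user_verification` and `issuer_tier`, their values, and the three access tiers are invented for illustration; the framework does not define them here.

```python
def access_tier(trust_tags: dict) -> str:
    """Map verified trust signals to a coarse access tier (hypothetical policy)."""
    strong_user = trust_tags.get("user_verification") == "mfa"
    certified_issuer = trust_tags.get("issuer_tier") == "certified"
    if strong_user and certified_issuer:
        return "full"
    if strong_user or certified_issuer:
        return "limited"
    return "read_only"  # unknown issuer and weak user verification


print(access_tier({"user_verification": "mfa", "issuer_tier": "certified"}))
print(access_tier({"user_verification": "email", "issuer_tier": "certified"}))
print(access_tier({}))
```

Because the tags travel inside the signed token, the Service Provider can apply a policy like this without a separate call to the issuer.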

Differentiating Agent Access from Abuse

Scenario: A popular e-commerce site or event ticketing platform is plagued by sophisticated bots scraping pricing data excessively or attempting to hoard limited inventory faster than human users can react. Blocking based on IP or simple CAPTCHAs is often ineffective.

Solution Principle: Implement policies that differentiate access based on verifiable agent identity. Legitimate agents present verifiable credentials. Unverifiable or anonymous automated traffic can be strictly rate-limited or blocked.

Benefit: Allows SPs to welcome beneficial automation while effectively mitigating abusive automation. Protects platform integrity and ensures fairer access for human users.

Alternatives & Gaps:

- Advanced Bot Detection: Can be effective but often results in an arms race; may block legitimate automation or inconvenience humans.

Gap: Focuses on blocking bad behavior, not enabling good automation.

- Strict Rate Limiting: Can throttle legitimate use cases along with abuse.

Gap: Indiscriminate.

- Proof-of-Work/CAPTCHA: Adds friction, potentially solvable by sophisticated bots.

Gap: Friction, potentially ineffective.
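To illustrate differentiated treatment, here is a minimal fixed-window rate limiter that gives verified agents a higher quota than unverifiable traffic. The class name `AgentAwareLimiter`, the limits, and the client-key scheme are assumptions for this sketch; a production limiter would also need to expire old windows.

```python
from collections import defaultdict


class AgentAwareLimiter:
    """Fixed-window request counter with separate quotas for verified agents
    and anonymous automated traffic (illustrative only)."""

    def __init__(self, verified_limit: int, anonymous_limit: int,
                 window_seconds: int = 60) -> None:
        self.verified_limit = verified_limit
        self.anonymous_limit = anonymous_limit
        self.window_seconds = window_seconds
        self._counts = defaultdict(int)  # (client_key, window index) -> requests seen

    def allow(self, client_key: str, verified: bool, now: float) -> bool:
        window = int(now // self.window_seconds)
        limit = self.verified_limit if verified else self.anonymous_limit
        bucket = (client_key, window)
        if self._counts[bucket] >= limit:
            return False
        self._counts[bucket] += 1
        return True


limiter = AgentAwareLimiter(verified_limit=3, anonymous_limit=1)
# A verified agent is keyed by its AID; anonymous traffic by whatever is available.
aid = "aif://issuer.example/model-x/pseudo-7f3a/inst-001"
print([limiter.allow(aid, True, now=0.0) for _ in range(4)])
print([limiter.allow("ip:203.0.113.7", False, now=0.0) for _ in range(2)])
```

Keying verified traffic by AID rather than IP address means a well-behaved agent is not penalized for sharing cloud infrastructure with abusive clients.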

Physical Devices & IoT Interactions

Scenario: A user wants their AI assistant agent to control smart home devices (lights, thermostat, locks) via the device manufacturer's cloud API. The API needs assurance that the command originates from an entity genuinely authorized by the homeowner.

Solution Principle: The agent authenticates to the IoT platform's API using a verifiable identity token that proves it was delegated by the registered homeowner with specific permissions (e.g., {"action": "set_thermostat", "device_id": "thermo123", "conditions": {"min_temp": 18, "max_temp": 25}}).

Benefit: Enables secure, delegated control of physical systems via AI agents, preventing unauthorized access or manipulation while providing an audit trail tied to the specific agent and user delegation.

Alternatives & Gaps:

- Shared API Keys per User: If the key leaks from one agent/app, all devices are compromised. Lacks granularity.

Gap: Security risk, no granular control.

- Standard OAuth per Device/Platform: Better, but the SP still only sees the "Agent Platform" client ID, not the specific agent instance or task purpose.

Gap: Lacks agent-specific identity and context.
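The thermostat permission from the Solution Principle can be evaluated with a few lines of code. The sketch below reuses the permission object shown there; the function name `thermostat_command_allowed` and the choice to treat a missing bound as unbounded are assumptions.

```python
def thermostat_command_allowed(permissions: list, device_id: str,
                               target_temp: float) -> bool:
    """Return True if any granted permission covers this device and temperature."""
    for perm in permissions:
        if perm.get("action") != "set_thermostat":
            continue
        if perm.get("device_id") != device_id:
            continue
        conditions = perm.get("conditions", {})
        low = conditions.get("min_temp", float("-inf"))   # missing bound: no lower limit
        high = conditions.get("max_temp", float("inf"))   # missing bound: no upper limit
        if low <= target_temp <= high:
            return True
    return False


granted = [{"action": "set_thermostat", "device_id": "thermo123",
            "conditions": {"min_temp": 18, "max_temp": 25}}]
print(thermostat_command_allowed(granted, "thermo123", 21))
print(thermostat_command_allowed(granted, "thermo123", 30))
```

The first call is within the delegated range and the second is not, so the IoT platform would reject a request to set 30 degrees even though the agent holds a valid token.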

Verifiable Identity in Agent-Initiated Communication

Scenario: An agent initiates a phone call or sends an email/chat message on behalf of a user (e.g., appointment scheduling, customer service inquiry). The recipient needs to know if the communication is genuinely from an authorized agent representing that user or if it's spam/phishing.

Solution Principle: The communication protocol incorporates or references a verifiable agent credential. For calls, this could be integrated via STIR/SHAKEN extensions or call setup protocols. For email/chat, headers or embedded tokens could carry the verifiable assertion.

Benefit: Allows recipients to verify the legitimacy of agent-initiated communications, filter spam/impersonation attempts, prioritize trusted interactions, and access relevant context about the agent's purpose.

Alternatives & Gaps:

- No Verification: Recipient relies on heuristics, caller ID number (spoofable), email headers (spoofable), or content analysis. Prone to spam and phishing.

Gap: No reliable verification.

- Proprietary Platform Markers: E.g., Google Duplex identifying itself verbally. Not standardized, not cryptographically verifiable, limited to specific platforms.

Gap: Not standard, not verifiable.

Secure Agent Lifecycle Management

Scenario: An agent instance is long-running, but the user's circumstances change (e.g., they leave the company that delegated the agent, or they explicitly revoke permission for a specific task). How can access be reliably terminated? How can agent software be securely updated?

Solution Principle: While tokens are short-lived, the underlying agent identity and its association with the delegating user/principal need lifecycle management. A robust, verifiable revocation mechanism is key.

Benefit: Provides mechanisms beyond token expiry for managing agent authorization over time, responding to changes in user status or explicit revocation requests, and potentially tracking agent software versions via metadata linked to the agent identity.

Alternatives & Gaps:

- Relying Solely on Token Expiry: Doesn't handle immediate revocation needs.

Gap: Lacks immediate revocation.

- Proprietary Agent Management Platforms: Each builder creates their own lifecycle system.

Gap: Not standardized, no interoperable revocation signal to SPs.

- OAuth Refresh Token Revocation: Revokes the platform's ability to get new tokens, but doesn't target specific agent instances or delegations granularly.

Gap: Coarse-grained revocation.
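Granular revocation could be modelled as in the sketch below, which supports revoking a single token, a single agent instance, or every agent delegated by one principal. It is an in-memory stand-in for whatever the Registry Service would expose; the class `RevocationList`, its methods, and the use of AID prefixes for delegation-level revocation are assumptions.

```python
class RevocationList:
    """In-memory stand-in for a registry revocation check (illustrative only)."""

    def __init__(self) -> None:
        self._token_ids = set()
        self._aid_prefixes = set()

    def revoke_token(self, token_id: str) -> None:
        self._token_ids.add(token_id)

    def revoke_aid_prefix(self, prefix: str) -> None:
        # A full AID revokes one instance; "aif://issuer/model/user" revokes
        # every instance delegated by that user for that model.
        self._aid_prefixes.add(prefix.rstrip("/"))

    def is_revoked(self, claims: dict) -> bool:
        if claims.get("jti") in self._token_ids:
            return True
        aid = claims.get("sub", "")
        return any(aid == p or aid.startswith(p + "/") for p in self._aid_prefixes)


registry = RevocationList()
token = {"jti": "atk-1", "sub": "aif://issuer.example/model-x/alice/inst-001"}
print(registry.is_revoked(token))
# The user leaves the company: revoke everything they delegated.
registry.revoke_aid_prefix("aif://issuer.example/model-x/alice")
print(registry.is_revoked(token))
```

Matching on a prefix followed by a slash avoids revoking an unrelated principal whose identifier merely starts with the same characters.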

Reference: This framework is informed in part by “Authenticated Delegation and Authorized AI Agents” (South et al., 2024), which introduced a structured approach to delegating authority from users to AI systems through extensions to OAuth 2.0 and OpenID Connect.